Abstract

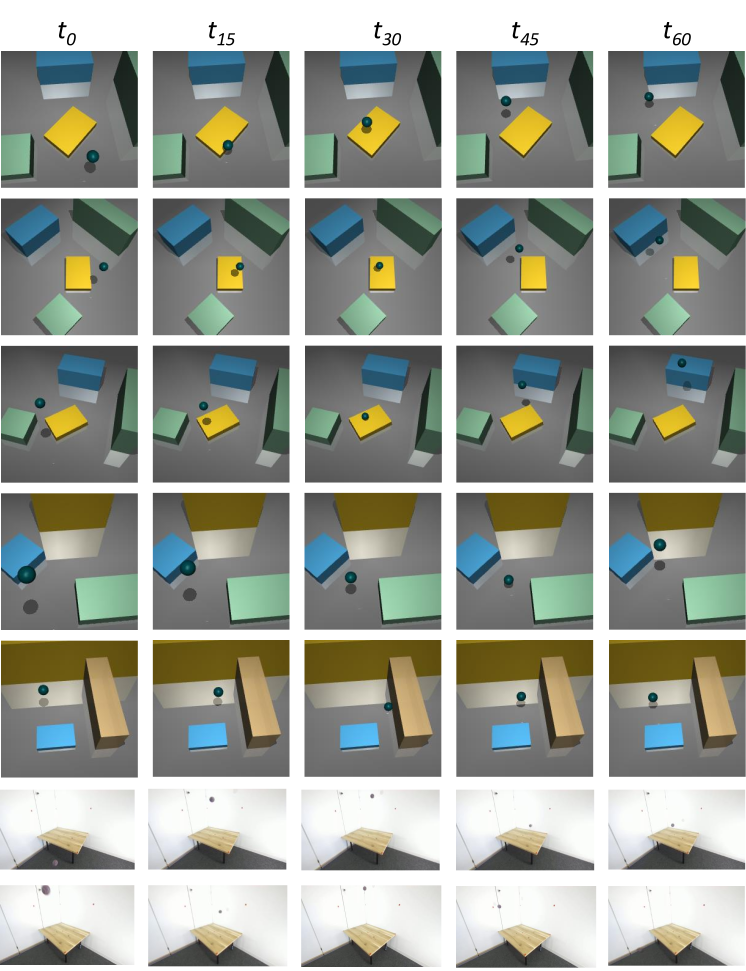

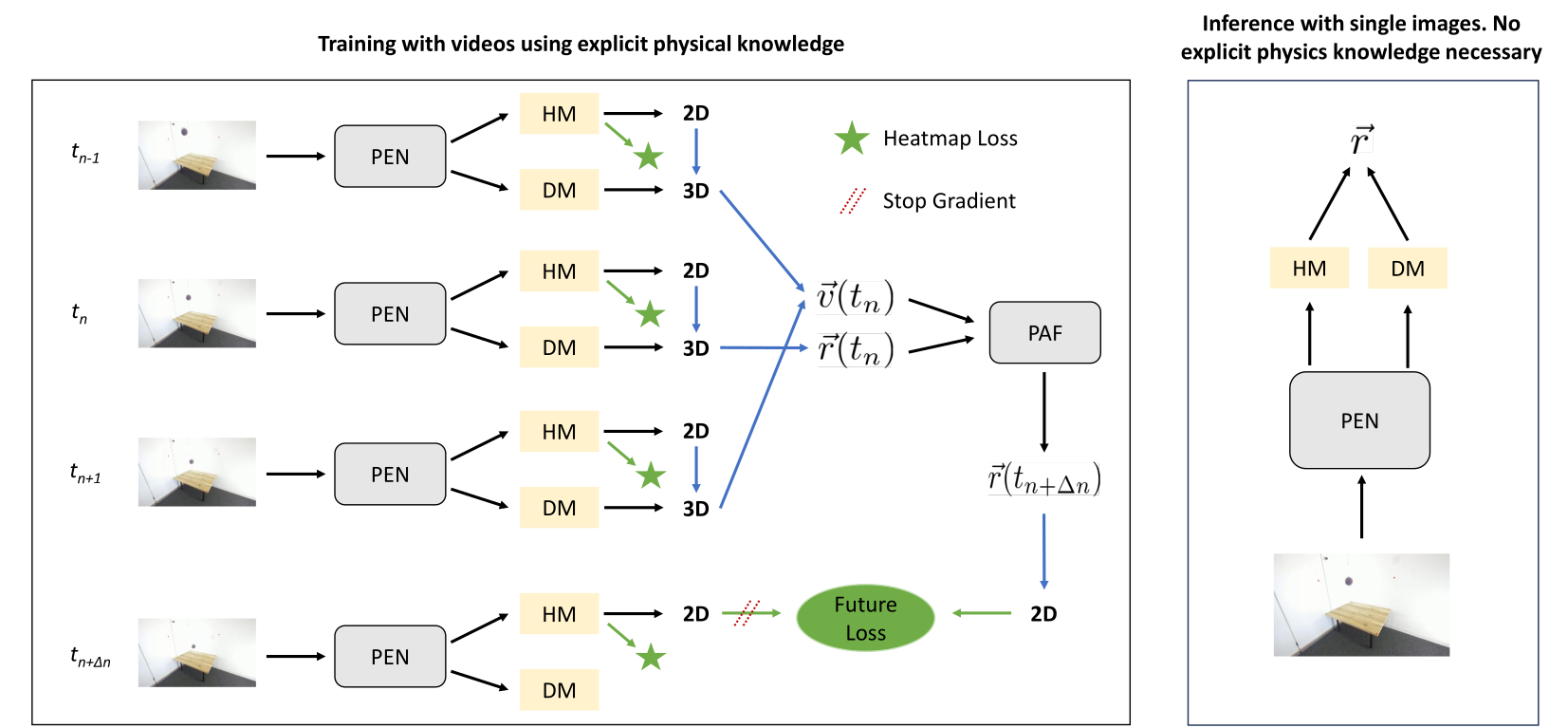

We present a novel method for precise 3D object localization in single images from a single calibrated camera using only 2D labels. Instead of expensive 3D labels, our model is trained with easy-to-annotate 2D labels along with physical knowledge of the object's motion. Given this information, the model can infer the latent third dimension, even though it has never seen 3D labels during training. We evaluate our method on both synthetic and real-world datasets and achieve a mean distance error of just 6 cm in our experiments on real data. The results indicate the method's potential as a step towards learning 3D object location estimation in settings where collecting 3D data for training is not feasible.
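As a rough intuition for why 2D labels combined with physical motion laws can carry depth information, consider the toy sketch below. It is not the paper's model or training procedure. It only assumes a pinhole camera and free flight under gravity, and every concrete value in it (the intrinsics `K`, the camera axis convention with y pointing down, the start positions and velocities) is hypothetical. Two trajectories that start on the same viewing ray are indistinguishable in the image without gravity, but the known magnitude of gravity makes their 2D tracks diverge, so a 2D reprojection error can tell the depth hypotheses apart.

```python
import numpy as np

# Assumption: camera frame with y pointing down, so gravity acts along +y.
GRAVITY = np.array([0.0, 9.81, 0.0])

def ballistic_positions(p0, v0, times, gravity=GRAVITY):
    """3D positions under constant acceleration: p(t) = p0 + v0*t + 0.5*g*t^2."""
    t = np.asarray(times, dtype=float)[:, None]
    return p0 + v0 * t + 0.5 * gravity * t**2

def project(points, K):
    """Pinhole projection of camera-frame 3D points (N, 3) to pixels (N, 2)."""
    uvw = points @ K.T
    return uvw[:, :2] / uvw[:, 2:3]

# Hypothetical intrinsics of a calibrated camera.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
times = np.linspace(0.0, 0.5, 6)

# Two depth hypotheses on the same viewing ray: 2 m and 4 m from the camera.
p_near = np.array([0.2, -0.1, 2.0])
v_near = np.array([1.0, -2.0, 0.0])
p_far, v_far = 2.0 * p_near, 2.0 * v_near

# With gravity, the 2D tracks agree in the first frame and then diverge.
uv_near = project(ballistic_positions(p_near, v_near, times), K)
uv_far = project(ballistic_positions(p_far, v_far, times), K)
gap_px = np.linalg.norm(uv_near - uv_far, axis=1)

# Without gravity, the same two hypotheses give identical 2D tracks.
zero_g = np.zeros(3)
uv_near_0g = project(ballistic_positions(p_near, v_near, times, zero_g), K)
uv_far_0g = project(ballistic_positions(p_far, v_far, times, zero_g), K)

print("pixel gap per frame with gravity:", np.round(gap_px, 1))
print("tracks identical without gravity:", np.allclose(uv_near_0g, uv_far_0g))
```

In this toy setup, comparing a predicted 2D track against annotated 2D positions would penalize the wrong depth hypothesis, which illustrates how a physical motion prior can stand in for 3D supervision.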

Links

Our paper was accepted at the International Conference on 3D Vision (3DV) 2024. Find the code, dataset, and weights below.

- Paper PDF

- Poster

- Code on GitHub

- Dataset (see manual on GitHub), Weights

Citation

If you find this paper helpful, please consider citing:

@article{kienzle3dv2024,

author = {Daniel Kienzle and Julian Lorenz and Katja Ludwig and Rainer Lienhart},

title = {Towards Learning Monocular 3D Object Localization From 2D Labels Using the Physical Laws of Motion},

journal = {Proceedings of the International Conference on 3D Vision 2024 (3DV)},

year = {2024},

}

License

The structure of this page is adapted from nvlabs.github.io/eg3d, which was published under the Creative Commons CC BY-NC 4.0 license.